This version of SWEHB is associated with NPR 7150.2B. Click for the latest version of the SWEHB based on NPR7150.2D

1. Requirements

4.4.8 The project manager shall validate and accredit software tool(s) required to develop or maintain software.

1.1 Notes

NPR 7150.2, NASA Software Engineering Requirements, does not include any notes for this requirement.

1.2 Applicability Across Classes

If Class D software is safety critical, this requirement applies to the safety-critical aspects of the software.

Class |

A |

B |

C |

CSC |

D |

DSC |

E |

F |

G |

H |

|---|---|---|---|---|---|---|---|---|---|---|

Applicable? |

|

|

|

|

|

|

|

|

|

|

Key: ![]() - Applicable |

- Applicable | ![]() - Not Applicable

- Not Applicable

2. Rationale

Software development tools contain software defects. Commercial software development tools do have software errors. Validation and accreditation of the critical software development and maintenance tools ensure that the tools being used during the software development life cycle do not generate or insert errors in the software executable components. This requirement reduces the risk in the software development and maintenance areas of the software life cycle by assessing the tools against defined validation and accreditation criteria. The likelihood that work products will function properly is enhanced and the risk of error is reduced if the tools used in the development and maintenance processes have been validated and accredited themselves. This is particularly important for flight software (Classes A and B) which must work correctly with its first use if critical mishaps are to be avoided.

3. Guidance

Project managers are to use validated and accredited software tool(s) to develop or maintain software. Software is validated against test cases using tools and test environments that have been tested and known to produce acceptable results. Multiple techniques for validating software may be required based on the nature and complexity of the software being developed (see SWE-029). This process involves comparing the results produced by the software work product(s) being developed against the results from a set of ‘reference’ programs or recognized test cases. Care must be taken to ensure that the tools and environment used for this process should induce no error or bias into the software performance. Validation is achieved if there is agreement with expectations and no major discrepancies are found. Software tool accreditation is the certification that a software tool is acceptable for use for a specific purpose. Accreditation is conferred by the organization best positioned to make the judgment that the software tool in question is acceptable. The organization may be an operational user, the program office, or a contractor, depending upon the purposes intended. Validation and accreditation of software tool(s) required to develop or maintain software is particularly necessary in cases where:

- Complex and critical interoperability is being represented.

- Reuse is intended.

- Safety of life is involved.

- Significant resources are involved.

- Flight software is running on new processors where a software development tool may not have been previously validated by an outside organization.

The targeted purpose of this requirement was the validation and accreditation of software tools that directly affect the software being developed. Examples of software tools that directly affect the software code development include but are not limited to compilers, code-coverage tools, development environments, build tools, user interface tools, debuggers, and code generation tools. For example, if your project is using a code generation tool, the code output of that code generation tool is validated and accredited to verify that the generated code does not have any errors or timing constraints that could affect execution. Another example is the use of math libraries associated with a compiler. These linked libraries are typically validated and accredited prior to use in the executable code.

This requirement does not mean that a project needs to validate and accredit a software problem reporting tool or software defect database or a software metric reporting tool. These types of indirect tools can be validated by use. It is also recognized that formal accreditation of software development/maintenance tools is often provided by organizations outside NASA (e.g., National Institute of Standards and Technology [NIST], Association for Computing Machinery [ACM]) for many common software development tools such as compilers. It could be prohibitively expensive or impossible for NASA projects to perform equivalent accreditation activities.

Accreditation is conferred by the organization in the best position to make the judgment that the software tool in question is acceptable. Someone on the project needs to be able to determine an acceptable level of validation and accreditation for each tool being used on a software project and be willing to make a judgment that the tool is acceptable for the tool's intended use. Most of the time that decision can be, and is, made by the software technical authority. The acceptance criteria and validation approach can be captured and recorded in a software development plan, software management plan, or software maintenance plan.

Often the software work products being developed are based on new requirements and techniques for use in novel environments. These may require the development of supporting systems, i.e., new tools, test systems, and original environments, none of which have themselves ever been accredited. The software development team may also plan to employ accredited tools that have not been previously used or adapted to the new environment. The key is to evaluate and then accredit the development environment and its associated development tools against another environment/tool system that is accredited. The more typical case regarding new software development is the new use-case of bringing tools (otherwise previously accredited) together in a development environment (otherwise previously accredited) that have never been used together before (and not accredited as an integrated system).

A caveat exists that for many new NASA projects, previous ensembles of accredited development environments and tools just don’t exist. For these cases, projects must develop acceptable methods to assure their supporting environment is correct.

The flow of a software accreditation protocol or procedure typically requires an expert to conduct a demonstration and to declare that a particular software work product exhibits compatible results across a portfolio of test cases and environments.

Software can also be accredited by using industry accreditation tools. Engineers can be trained and thus certified in the correct use of the software accreditation tools.

Many of the techniques used to validate software (see SWE-055) can be applied by the software development team to validate and accredit their development tools, systems, and environments. These include the following:

- Functional demonstrations.

- Formal reviews.

- Peer reviews/ component inspections.

- Analysis.

- Use case simulations.

- Software testing.

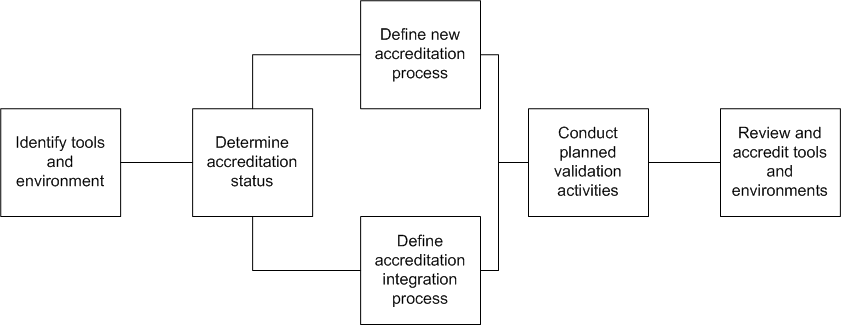

One suggested flow for identifying, validating, accepting, and accrediting new development tools and environments to be used to develop software work products subject to this requirement is shown in Figure 3.1. While it may seem obvious, it is important to review the current and expected development tools (compilers, debuggers, code generation tools, code-coverage tools, and others planned for use) and the development environments and release procedures, to assure that the complete set is identified, understood and included in the accrediting process.

Figure 3.1 – Sample Accreditation Process Flow

Once you have the complete list, the next step in the process is to review the accreditation status of each item. Ask the following questions:

- Is the accreditation current?

- Does the accreditation need to be re-certified?

- Is the accreditation applicable to the current development task?

- Will its accreditation continue throughout the development life cycle?

For tools that need accreditation, use the information in the preceding paragraphs to develop a new accreditation process. Keep in mind that the goal is to show that the tools will do what the project expects them to do. The Project team needs to review and accept the new process, and the software technical authority and the Center's software assurance personnel are to be informed of it. When the integration of accredited tools needs its own accrediting process, that planning is conducted using the same steps.

The actual steps and tasks in the process are not specified in this guidance because of the wide variety of tools and environments that currently exist or will have to be developed. They are left for the project and the software development team to develop. Once the Project Team has planned and approved the new and integrating accreditation processes, the appropriate validation activities are conducted. Center software assurance organization representative(s) are informed and their observation of the validation processes are accounted for in the activities. Results, process improvements, lessons learned, and any discrepancies that result are documented and retained in the project configuration management system. Any issues or discrepancies are re-planned and tracked to closure as part of the regular project's corrective and preventive action reporting system.

The final activity in the accreditation process is an inspection and peer review of the validation activity results. Once agreement is reached on the quality of the results (what constitutes "agreement" is decided ahead of time as a part of the process acceptance planning), the identified tools and environments can be accepted as accredited.

Additional guidance related to software tool accreditation may be found in the following related requirements in this Handbook:

4. Small Projects

Small projects with limited resources may look to software development tools and environments that were previously accredited on other projects. Reviews of project reports, Process Asset Libraries (PAL)s, and technology reports may surface previous use cases and/or acceptance procedures that are applicable to new tools pertinent to the current project.

5. Resources

- (SWEREF-082) NPR 7120.5F, Office of the Chief Engineer, Effective Date: August 03, 2021, Expiration Date: August 03, 2026,

- (SWEREF-157) CMMI Development Team (2010). CMU/SEI-2010-TR-033, Software Engineering Institute.

- (SWEREF-197) Software Processes Across NASA (SPAN) web site in NEN SPAN is a compendium of Processes, Procedures, Job Aids, Examples and other recommended best practices.

- (SWEREF-224) ISO/IEC 12207, IEEE Std 12207-2008, 2008. IEEE Computer Society, NASA users can access IEEE standards via the NASA Technical Standards System located at https://standards.nasa.gov/. Once logged in, search to get to authorized copies of IEEE standards.

- (SWEREF-271) NASA STD 8719.13 (Rev C ) , Document Date: 2013-05-07

- (SWEREF-273) NASA SP-2016-6105 Rev2,

- (SWEREF-278) NASA-STD-8739.8B, NASA TECHNICAL STANDARD, Approved 2022-09-08 Superseding "NASA-STD-8739.8A"

- (SWEREF-370) ISO/IEC/IEEE 15289:2017. NASA users can access ISO standards via the NASA Technical Standards System located at https://standards.nasa.gov/. Once logged in, search to get to authorized copies of ISO standards.

- (SWEREF-695) The NASA GSFC Lessons Learned system. Lessons submitted to this repository by NASA/GSFC software projects personnel are reviewed by a Software Engineering Division review board. These Lessons are only available to NASA personnel.

5.1 Tools

Tools to aid in compliance with this SWE, if any, may be found in the Tools Library in the NASA Engineering Network (NEN).

NASA users find this in the Tools Library in the Software Processes Across NASA (SPAN) site of the Software Engineering Community in NEN.

The list is informational only and does not represent an “approved tool list”, nor does it represent an endorsement of any particular tool. The purpose is to provide examples of tools being used across the Agency and to help projects and centers decide what tools to consider.

6. Lessons Learned

No Lessons Learned have currently been identified for this requirement.